Snowflake DBT Tools

The podcast today is the latest episode of Beyond Transformation, where we talk about Snowflake DBT or data build tools. To help with the topic, Prabhash Thakur from Beyond Key joined.

Beyond Transformation is brought to you by Beyond Key. Beyond Key is a software development solutions organization. One of the services that we offer is Snowflake. DBT interacts with Snowflake.

Snowflake DBT Overview

Dbt is short for a data build tool. It’s a development framework combining modular SQL and software engineering best practices to make your data transformation reliable, fast, and fun.

Dbt is familiar to those who know how to write SQL select statements. They have the power to build their models, write test scenarios, and schedule their jobs to produce reliable, actionable data sets.

When you have data, you need to transform the data to give you business insight so that you can build the report on top of this. DBT acts as an orchestration for your transformation.

What is Dbt?

First, dbt is not a data warehousing solution. For example, in data warehousing, you follow the ETL (extract, transform, and load) approach,

In ETL, you first extract and load full raw data into your data warehouse that will act as a data link, and then you do the transformation on top of that raw data.

You then always have the true data, so you can perform as many transformations as you want. DBT helps you with the “T” or the transformation part of ELT.

Analytical Engineering: Data Engineering and Data Analyst

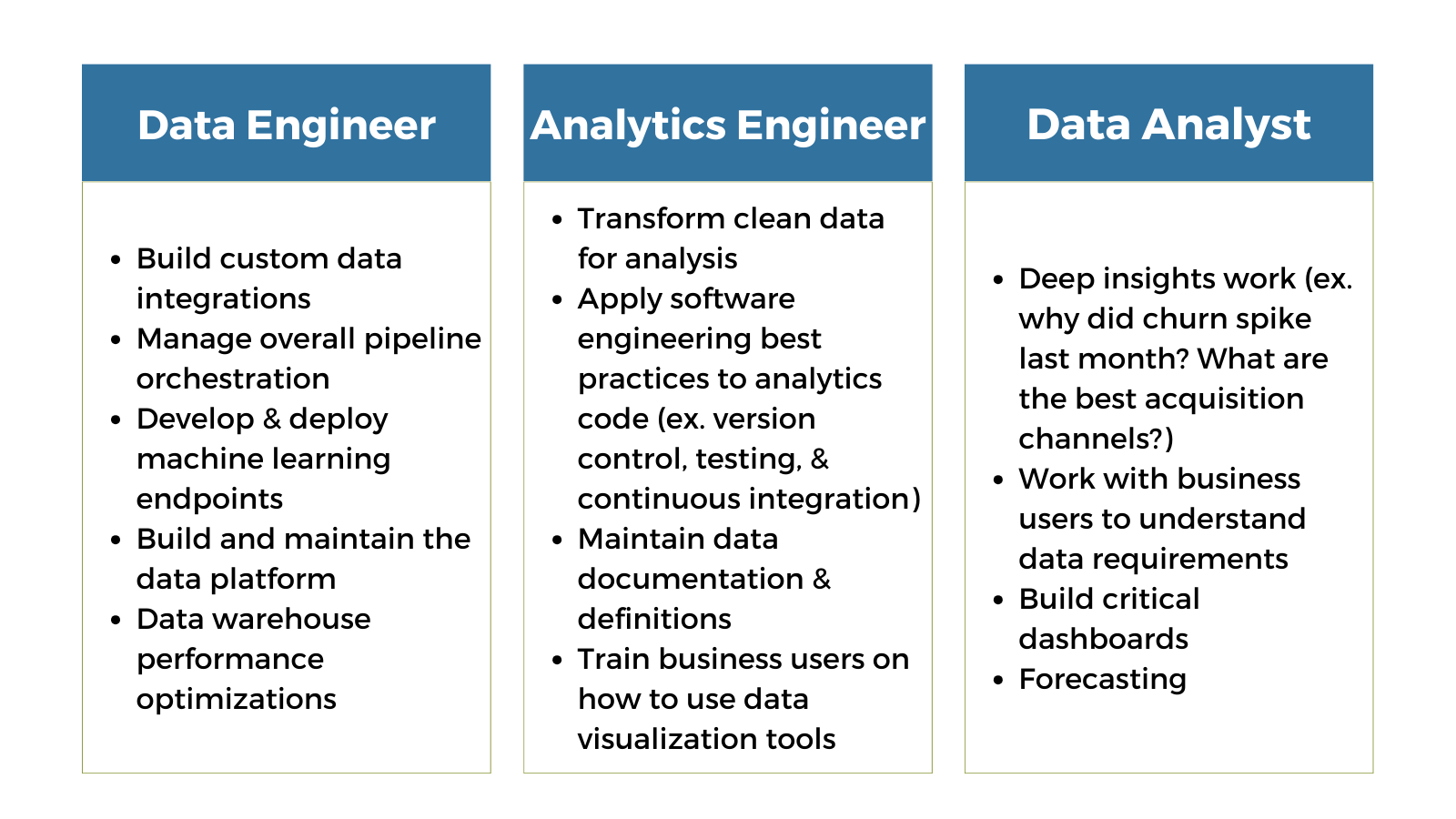

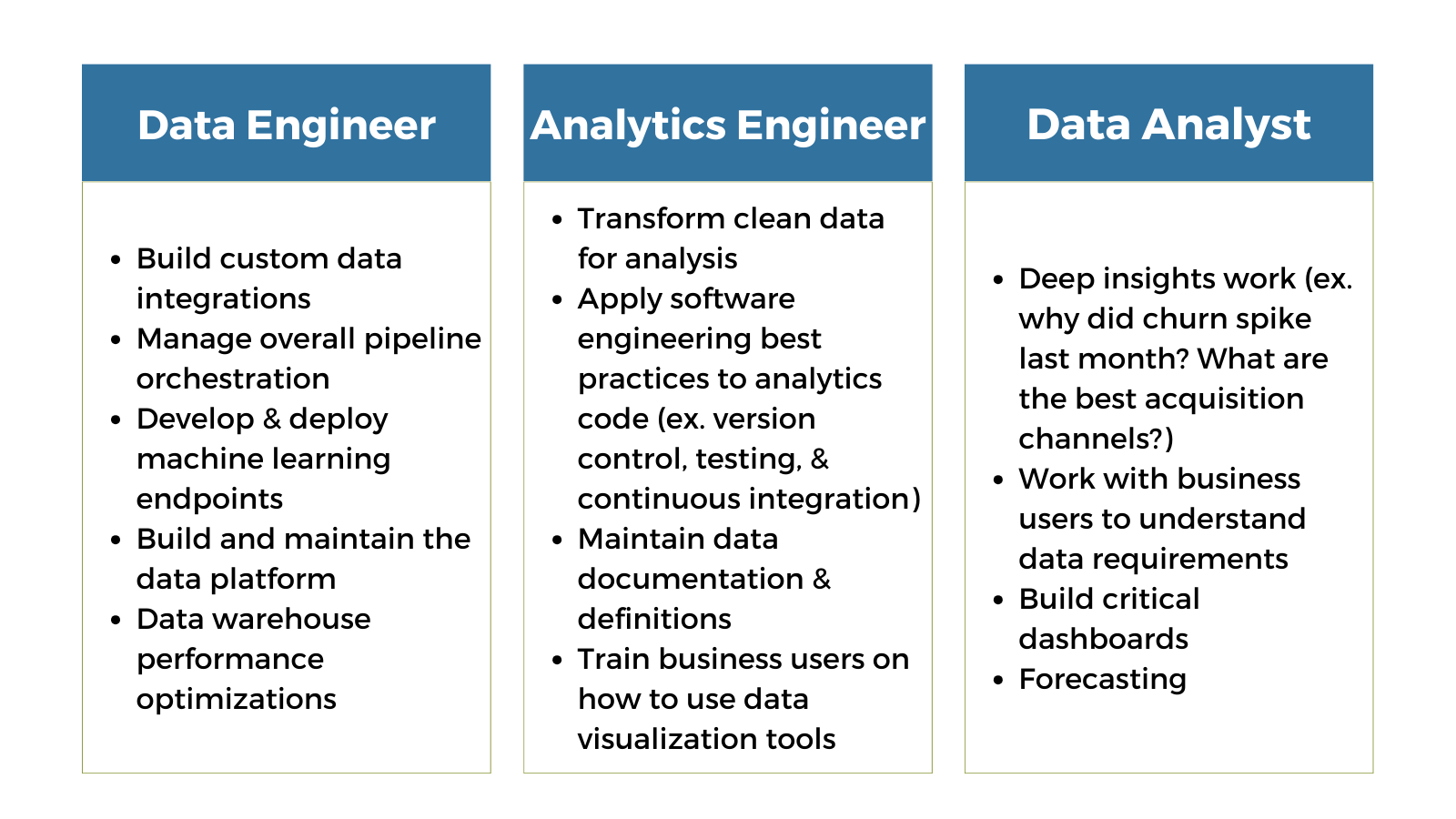

DBT uses another concept called analytical engineering. Analytical engineering acts as a bridge between data engineering and data analysis.

When we talk about data engineering, we build custom data integrations to manage the overall pipeline orchestration. Then, we deploy machine learning endpoints to build and maintain the data platforms.

Data engineering folks will then do some performance optimization. Analytical engineering provides clean, transformed data ready for analysis using software engineering best practices.

For example, delivering your models into small sprints. That’s agile methodology. It’s very difficult to perform agile methodology in data engineering because it is not enabled everywhere.

DBT comes with that. The tool documents the transformation in case anyone needs to review how the data transformed, what logic was used for the process, and the data sources.

On the other hand, a data analyst will do deep inside work like why did this point spike last month? What are the best acquisition channels? Then, they will work with the business users to understand data requirements to build forecasting dashboards.

DBT is key to analytical engineering because it maintains the documentation and trains business users how to use the data visualization created from the transformation of insights. As we said earlier, DBT is the “T” or the transformation of data.

How can you use DBT to transform your data into usable insights?

DBT is not connected to your extraction tool like how data is coming inside Snowflake or other data warehousing solutions like:

- BigQuery

- Databricks

- Postgres

- Redshift

- Spark

- Azure Synapse

- Teradata

The number of platforms connected to DBT keeps changing. You need to take the data and load it in raw format into your data platform; DBT will connect your data platform.

It will use your data platform’s compute and storage resources. You can access the DBT through your account credentials or use service account credentials to perform the transformation.

How can you use DBT to transform your data into usable insights with the right BI tool?

How can you use DBT to transform your data into usable insights with the right BI tool?

You can start building your DBT models by creating SQL files with select statements. DBT will do the rest for you.

For executives, an SQL file is like a CSV file. You create a .SQL file that works with any code editor like Notepad++ or VS code to store relational databases.

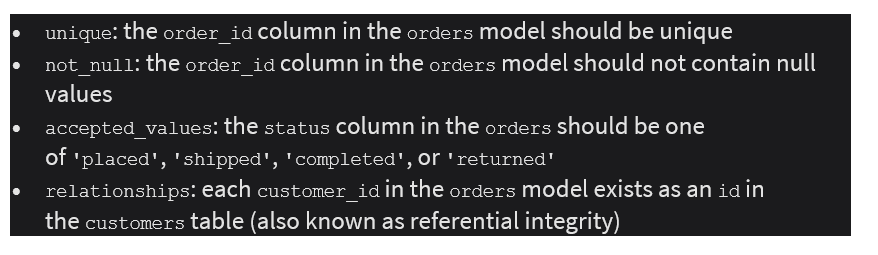

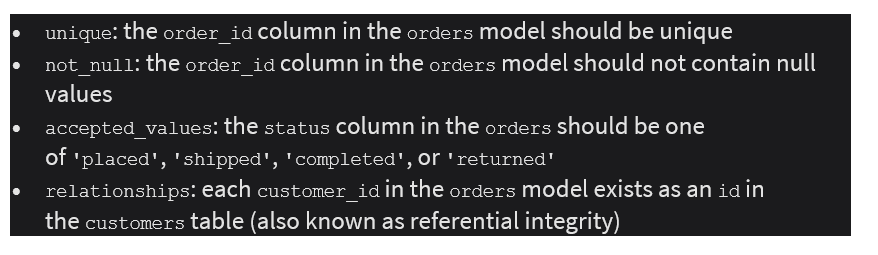

You can write data testing scenarios to make your data trustable, which is, again, very easy as dbt comes with some generic test scenarios.

Not only this, but you can also use packages like dbt-expectation to solve your complex and granular test scenarios.

And still, if that doesn’t fit your requirements, you can write your custom scenario once and use it across your project.

DBT also acts as a single source of truth. You can define your metrics inside dbt and connect your reporting tools with dbt (which dbt calls the semantic layer) to get those metrics directly from dbt. By doing this, you never get two values for the same metrics.

Once that is ready, you can connect your BI tools as usual and start consuming those tables for your report.

What data engineering problems does DBT solve?

Eliminates Data Silos:

Data silos are isolated data that are stored in separate data sources. Today, data silos are common trends across industries.

Data from different departments like sales and marketing reside in various sources. Without centralization, marketing professionals will struggle to access sales data and vice versa. This reduces the data engineers’ productivity as they often must work on ad-hoc data models for different teams. Being reactive over proactive impacts the efficiency of the entire organization.

You can become proactive by creating a centralized logic for different dbt use cases within a data warehouse. Dbt can help you create all your transformational logic with models that can be leveraged across multiple downstream processes.

Data Quality

Data quality is one of the biggest challenges for data engineers.

Most of the time, data engineers spend on updating, maintaining, and identifying quality issues in the data pipeline. Since data come from multiple sources, the same records are often stored in different forms and structures. This makes it challenging to clean and enrich data to create a single source of truth.

dbt allows you to clean data with simple SQL statements or Python programming.

As we already discussed, how dbt can help you with testing scenarios.

You can also add a source freshness test, which will give you a warning or error if data becomes stale after a certain period.

Brings Transparency

Analysts are often critical of the data they are using for generating insights. This happens because analysts are not aware of the source of data. You need to understand where the data is coming from and what kinds of transformation it has gone through.

Answers to these questions allow analysts to carry out business intelligence processes and generate reports confidently. Analysts can communicate with the decision makers to trust the insights if they know everything in and out of the data source.

dbt eliminates the need for guesswork and brings trust among stakeholders by providing data lineage and documentation. Data lineage lets you track the data’s history, origin, and transformation. Data lineage in dbt is represented by a Directed Acyclic Graph (DAG). It is also used to understand the dependencies among models to ensure you build dbt projects with complete transparency.

On the other hand, dbt documentation assists you in identifying the types of transformations, the logic associated with models, and more. dbt documents also include information about test cases to eliminate any data discrepancies. Any business needs to make decisions based on accurate insights. As data engineers can streamline the generation of documentation and data lineage, it brings trust among decision-makers.

Simplifies Data Pipeline Workflows

With dbt Cloud, you can use built-in CI/CD features that don’t require extensive maintenance. You can set up CI/CD with GitHub or Azure DevOps to automate runs and check your data on pull requests. CI jobs can be further triggered with APIs to check the impacted models before deployment. You can also standardize the format of SQL code pushed to make changes.

Is it better to use DBT Cloud or DBT Core?

Although dbt core is free to use, and dbt cloud comes with some costs starting at $100/month, Dbt cloud is a better option. It comes with beautifully integrated capability for CI/CD Jobs and scheduling pipelines.

It’s a browser-based UI with no need to install any software or maintain any dbt version. The UI has SQL formatting capability, making your code look good and readable.

It also comes with dbt documentation hosting that supports API Job triggers. You can orchestrate your data ingestion and then data transformation.

As we discussed earlier, the dbt semantic layer will give you a single source of truth for your business metrics; it is supported only by the dbt cloud.

Finally, the dbt cloud has certifications for ISO, SOC2, GDPR, PCI, and HIPAA data security.

What BI tools will this work with specifically?

Depending on your data warehouse, dbt is an orchestration tool. The queries will still run inside your data platform using the data platform’s resources like computing and storage.

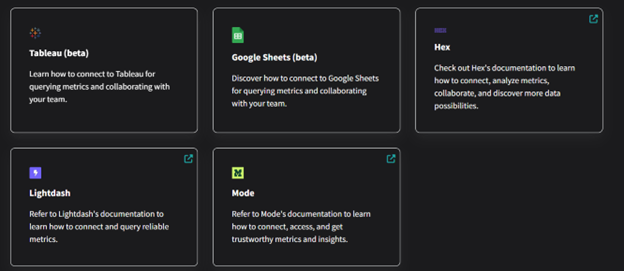

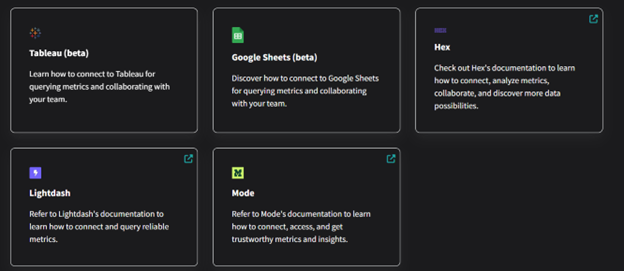

Regarding the semantic layer, currently, only 5 BI services are supported, such as Tableau, Google Sheets, Hex, Lightdash, and Power BI Mode.

The key is whether your data warehouse can connect to these services. If so, then dbt is just like an orchestration tool. Queries will still run inside your data platform using the data platform’s resources like compute and storage.

Final Thoughts

If you have more questions about dbt Cloud and how to integrate it with Snowflake, connect with our team for a free 30-minute consultation call.

How can you use DBT to transform your data into usable insights with the right BI tool?

How can you use DBT to transform your data into usable insights with the right BI tool?