Snowflake Integration: Effortless Data Mastery for Modern Businesses

Data is everywhere. But often, it’s like trying to find a matching sock in a teenager’s room—scattered, hard to access, and frustrating to deal with. Does your organization’s data feel the same? If yes, welcome to the club.

Snowflake Integration doesn’t just organize your data; it turns that data into actionable insights at lightning speed. And no, it’s not just another buzzword: it can be the game-changer your business needs.

Let’s talk about why Snowflake integration matters, what it can do for your business, and how it transforms the way you manage and utilize data.

What is Snowflake Integration?

Think of Snowflake Integration as the ultimate data connector—like the universal adapter for all your data needs.

Snowflake, a cloud-based data warehousing platform, integrates seamlessly with other tools, applications, and data sources, enabling you to:

- Migrate data from legacy systems into a modern, scalable solution.

- Set up ETL (Extract, Transform, Load) workflows to process and analyze data effortlessly.

- Power real-time analytics with continuous data streaming.

- Collaborate across teams and partners with secure data sharing.

In short, it’s not just about moving data—it’s about making data work for you.

Why Snowflake Integration Is a Game-Changer

True, you might already have processes to manage your data. But ask yourself: Are they fast? Scalable? Secure? Probably not.

Here’s why Snowflake integration outshines traditional systems:

- No More Data Silos: Snowflake breaks down the barriers between your systems, giving you a single, unified view of your data.

- Real-Time Insights: Forget waiting hours (or days) for reports. With features such as Snowpipe, Streams, and Tasks, Snowflake supports near-real-time data processing for faster decision-making.

- Boundless Scalability: Snowflake separates storage from compute, so each scales independently as your data grows, with no hardware to buy or manage.

- Automation at Its Best: From data ingestion to transformation, features such as Snowpipe and Tasks automate repetitive work, freeing up your team for more strategic tasks.

The choice is clear: Snowflake isn’t just an upgrade. It’s a revolution for your data strategy.

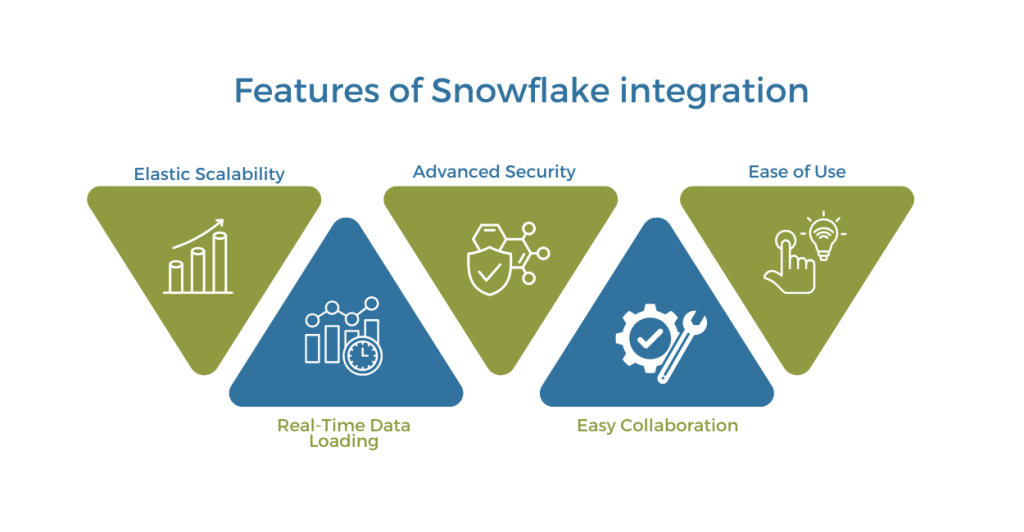

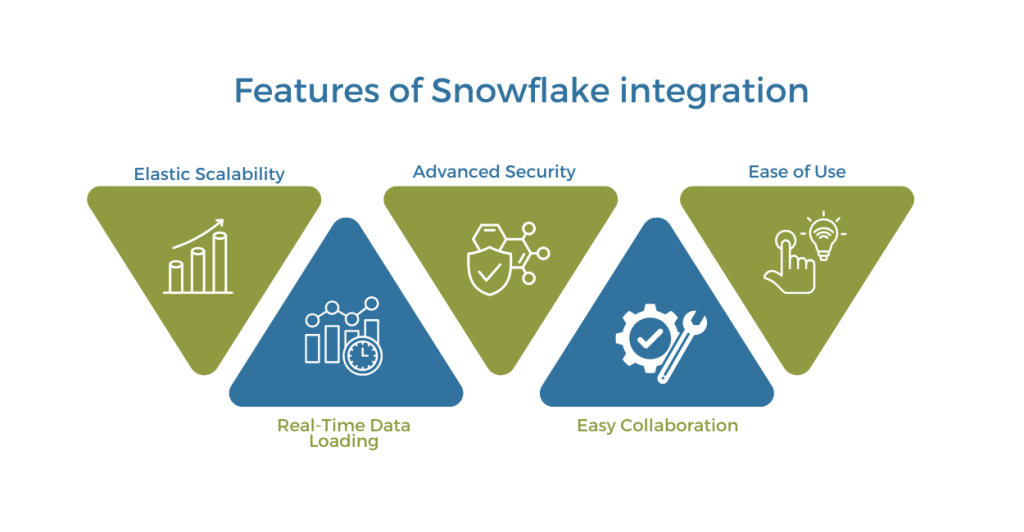

Key Features of Snowflake Integration

To truly appreciate what Snowflake can do, let’s dive into its most compelling features:

- Elastic Scalability: Scale up or down on demand without worrying about infrastructure. (Think of this as paying for electricity—you use what you need, when you need it.)

- Near-Real-Time Data Loading: Snowpipe loads new files from a stage continuously, typically within minutes of their arrival, so your data pipelines stay up to date.

- Advanced Security: Encryption of data at rest and in transit, role-based access control, and compliance features keep your data safe.

- Easy Collaboration: Share live data securely with internal teams or external partners without duplication.

- Ease of Use: Snowflake simplifies data management, even for non-technical users.

It’s like having a Swiss Army Knife for your data—but one that sharpens itself as you use it.
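Secure data sharing, listed under Easy Collaboration, is set up with a few SQL statements. The Python sketch below only assembles those statements. The share, database, schema, table, and consumer account names are hypothetical placeholders. You would run each statement yourself, for example with `cursor.execute()` from snowflake-connector-python, under a role that is allowed to create shares.

```python
import re

# Accept only plain identifiers (optionally dotted, e.g. ORG.ACCOUNT) so
# caller-supplied names cannot smuggle extra SQL into the statements.
_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*(\.[A-Za-z_][A-Za-z0-9_$]*)*$")


def _ident(name: str) -> str:
    if not _IDENT.match(name):
        raise ValueError(f"unsupported identifier: {name!r}")
    return name


def share_table_sql(share: str, database: str, schema: str, table: str,
                    consumer_account: str) -> list[str]:
    """Statements that expose one table to a consumer account through a share."""
    share, database, schema, table, consumer = map(
        _ident, (share, database, schema, table, consumer_account))
    return [
        f"CREATE SHARE {share}",
        # A share needs USAGE on the containing database and schema...
        f"GRANT USAGE ON DATABASE {database} TO SHARE {share}",
        f"GRANT USAGE ON SCHEMA {database}.{schema} TO SHARE {share}",
        # ...plus SELECT on each object the consumer may query.
        f"GRANT SELECT ON TABLE {database}.{schema}.{table} TO SHARE {share}",
        # The consumer queries the live table; no data is copied.
        f"ALTER SHARE {share} ADD ACCOUNTS = {consumer}",
    ]
```

The consumer account is given in `ORGNAME.ACCOUNTNAME` form. Rejecting anything that is not a plain identifier is a simple safeguard, because these names are interpolated directly into SQL text.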

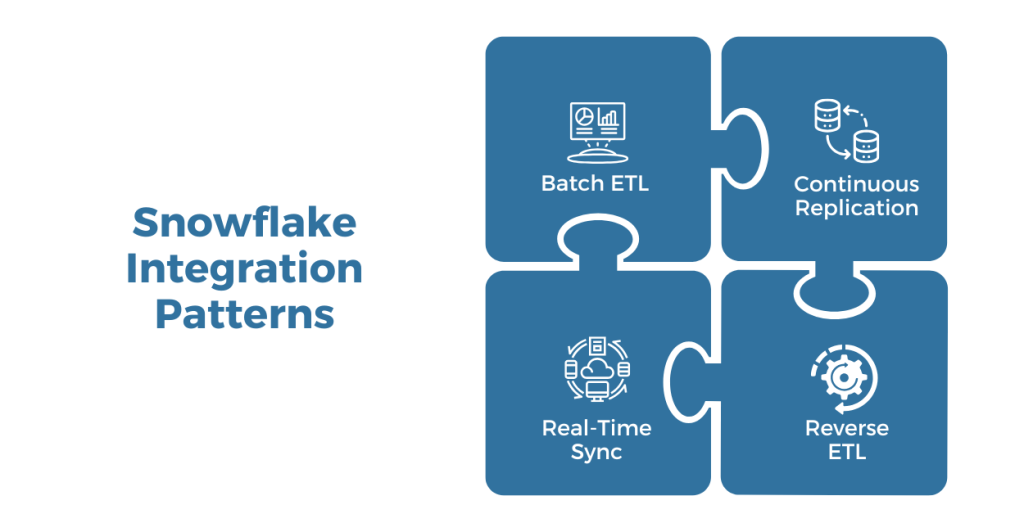

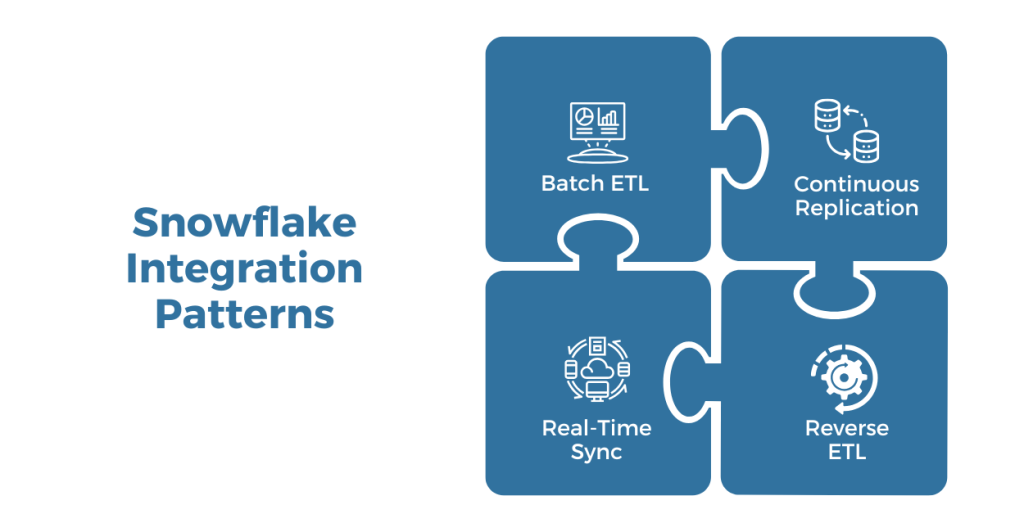

Snowflake Integration Patterns

Not all integrations are created equal. Depending on your needs, here are the most common ways businesses connect with Snowflake:

- Batch ETL: Ideal for handling large datasets periodically. Data is extracted, transformed, and loaded into Snowflake in bulk using the COPY INTO command or partner connectors. (Think of this as meal prepping: get everything ready in one go.)

- Continuous Replication: For near-real-time updates, Snowpipe and Change Data Capture (CDC) keep your pipelines in sync as new data streams in. (No overnight waits; new data typically lands within minutes.)

- Real-Time Sync: When speed is critical, Snowflake Streams capture changes to a table, and Tasks run SQL on a schedule (optionally only when a stream has new data) to process those changes. This suits use cases such as fraud detection or live dashboards.

- Reverse ETL: Extract data from Snowflake and push it into downstream tools like CRMs or marketing platforms. This turns insights into action.
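To make the Streams and Tasks pattern more concrete, here is a minimal Python sketch that generates the SQL for it. It assumes a hypothetical source table `raw_orders`, a target table `orders` with the listed columns, and a warehouse `etl_wh`. The stream and task names are derived from those inputs and are illustrative only.

```python
import re

_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")


def _ident(name: str) -> str:
    """Reject anything that is not a plain, unquoted Snowflake identifier."""
    if not _IDENT.match(name):
        raise ValueError(f"unsupported identifier: {name!r}")
    return name


def stream_and_task_sql(source: str, target: str, columns: list[str],
                        warehouse: str, minutes: int = 1) -> list[str]:
    """A stream on `source` plus a task that appends captured rows to `target`."""
    if not columns:
        raise ValueError("at least one column is required")
    src, tgt, wh = _ident(source), _ident(target), _ident(warehouse)
    cols = ", ".join(_ident(c) for c in columns)
    stream, task = f"{src}_stream", f"{src}_to_{tgt}_task"
    return [
        # The stream records row-level changes (CDC) made to the source table.
        f"CREATE OR REPLACE STREAM {stream} ON TABLE {src}",
        # The task checks on a schedule but only runs when the stream has data.
        # Selecting from the stream inside DML advances the stream's offset.
        # METADATA$ACTION = 'INSERT' keeps new rows and the new version of
        # updated rows; a production pipeline would often use MERGE instead.
        f"CREATE OR REPLACE TASK {task}\n"
        f"  WAREHOUSE = {wh}\n"
        f"  SCHEDULE = '{int(minutes)} MINUTE'\n"
        f"  WHEN SYSTEM$STREAM_HAS_DATA('{stream}')\n"
        "AS\n"
        f"  INSERT INTO {tgt} ({cols})\n"
        f"  SELECT {cols} FROM {stream} WHERE METADATA$ACTION = 'INSERT'",
        # New tasks start suspended, so resume this one to begin the schedule.
        f"ALTER TASK {task} RESUME",
    ]
```

The `WHEN SYSTEM$STREAM_HAS_DATA(...)` condition causes Snowflake to skip scheduled runs when nothing has changed. This avoids spending warehouse credits on empty runs.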

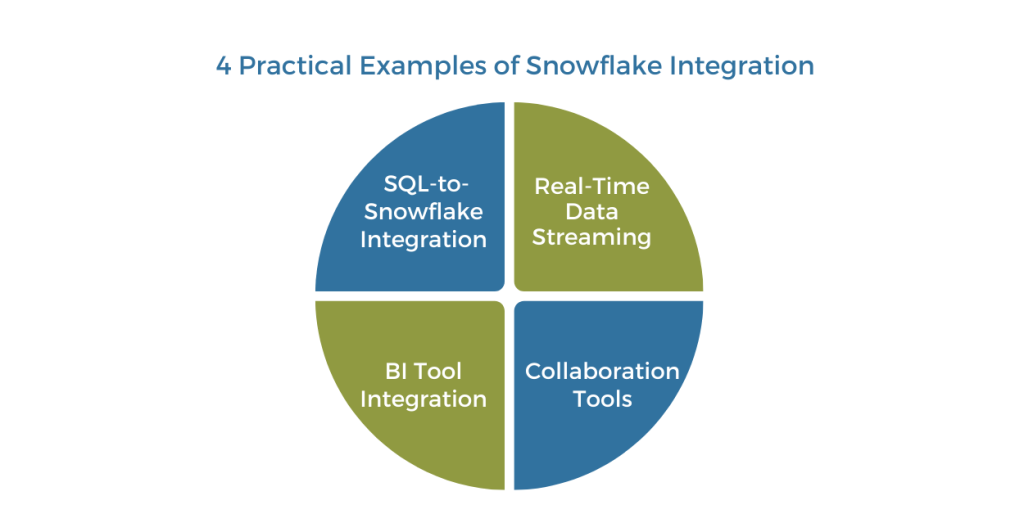

4 Practical Examples of Snowflake Integration

Let’s make this real. Here are four scenarios where Snowflake integration shines:

- SQL-to-Snowflake Integration: Easily migrate data from relational databases such as SQL Server or MySQL into Snowflake for faster analytics and better scalability.

- Real-Time Data Streaming: Use tools like Kafka or Amazon Kinesis to stream data into Snowflake for immediate processing.

- BI Tool Integration: Connect Snowflake with Tableau or Power BI for stunning data visualizations and powerful reporting.

- Collaboration Tools: Integrate Snowflake with platforms like Slack or Salesforce for seamless data sharing and governance.

And this is just scratching the surface. Snowflake supports integration across industries, from healthcare to retail to finance. Have a unique need? Snowflake can handle that too.

Business Benefits of Snowflake Integration

Here’s where it gets exciting—how Snowflake integration impacts your organization at every level:

At the Organizational Level

- Faster Decision-Making: Real-time analytics mean you’re always ahead of the curve.

- Cost Optimization: With pay-as-you-go pricing, you only pay for what you use.

- Streamlined Operations: Automation reduces manual effort, improving overall efficiency.

At the Business Level

- Enhanced Customer Experience: Personalize interactions with deeper, faster insights into customer behavior.

- Actionable Insights: Turn raw data into decisions that drive growth.

- Future-Proof Scalability: Snowflake adapts to your needs, whether you’re a startup or an enterprise.

In short, Snowflake doesn’t just make life easier—it helps you achieve your goals faster and more effectively.

Snowflake Integration in Action

To see Snowflake integration mechanisms in action, let us walk through a sample use case encompassing batch ETL orchestration and BI integration:

Suppose an e-commerce company keeps its product data in an on-premises MySQL database. The goal is to load this data into Snowflake and enable interactive reporting with Tableau. Here are the steps:

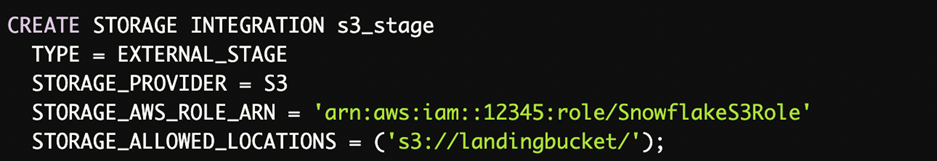

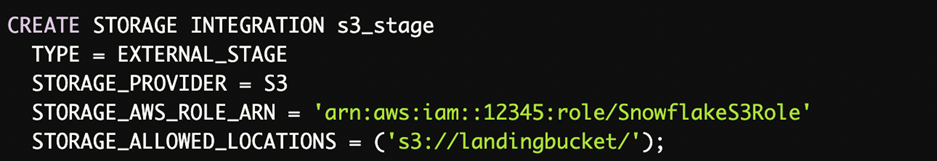

Step 1: Set up a storage integration and an external stage so Snowflake can securely access the files staged in Amazon S3.
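A storage integration lets Snowflake assume an AWS IAM role instead of storing access keys. An external stage then points at the S3 location where the staged files land. The sketch below assembles both statements in Python. The integration name, stage name, role ARN, and bucket URL are hypothetical.

```python
import re

_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")


def _ident(name: str) -> str:
    if not _IDENT.match(name):
        raise ValueError(f"unsupported identifier: {name!r}")
    return name


def _literal(value: str) -> str:
    """Quote a SQL string literal, doubling any embedded single quotes."""
    return "'" + value.replace("'", "''") + "'"


def s3_stage_sql(integration: str, stage: str, role_arn: str, s3_url: str) -> list[str]:
    if not s3_url.startswith("s3://"):
        raise ValueError("expected an s3:// URL")
    integ, stg = _ident(integration), _ident(stage)
    return [
        # The integration records the IAM role Snowflake assumes to read the
        # bucket and limits which S3 locations stages may point at.
        f"CREATE STORAGE INTEGRATION {integ}\n"
        "  TYPE = EXTERNAL_STAGE\n"
        "  STORAGE_PROVIDER = 'S3'\n"
        "  ENABLED = TRUE\n"
        f"  STORAGE_AWS_ROLE_ARN = {_literal(role_arn)}\n"
        f"  STORAGE_ALLOWED_LOCATIONS = ({_literal(s3_url)})",
        # The external stage points at the staging prefix and parses JSON files.
        f"CREATE STAGE {stg}\n"
        f"  URL = {_literal(s3_url)}\n"
        f"  STORAGE_INTEGRATION = {integ}\n"
        "  FILE_FORMAT = (TYPE = JSON)",
    ]
```

Creating the integration requires ACCOUNTADMIN or the CREATE INTEGRATION privilege. Afterward, `DESC INTEGRATION` returns the `STORAGE_AWS_IAM_USER_ARN` and `STORAGE_AWS_EXTERNAL_ID` values that belong in the IAM role's trust policy.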

Step 2: Use an ETL tool such as Informatica to extract the data from MySQL, transform it, and write the results as JSON files to the S3 staging area.
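Informatica performs this step through its own mappings, so there is no single piece of code to show. As a stand-in, the Python sketch below illustrates the shape of the output. Rows, as a MySQL driver might return them, are written as newline-delimited JSON, which Snowflake's JSON file format can load. The column names are hypothetical, and the upload to S3 (for example with boto3) is omitted.

```python
import json
from datetime import date, datetime
from decimal import Decimal


def _to_json_value(value):
    """Convert types a MySQL driver commonly returns into JSON-friendly values."""
    if isinstance(value, Decimal):
        return str(value)  # keep exact precision; cast to NUMBER in Snowflake
    if isinstance(value, (datetime, date)):
        return value.isoformat()
    return value


def rows_to_ndjson(columns: list[str], rows: list[tuple]) -> str:
    """Serialize rows as newline-delimited JSON, one object per line."""
    lines = []
    for row in rows:
        record = {col: _to_json_value(val) for col, val in zip(columns, row)}
        lines.append(json.dumps(record, separators=(",", ":")))
    return "\n".join(lines) + ("\n" if lines else "")
```

Prices are emitted as strings rather than floats to avoid rounding errors. The conversion to a typed column can then happen inside Snowflake.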

Step 3: Use the COPY INTO command to load the staged data from S3 into a Snowflake table.
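The COPY INTO statement for Step 3 might look like the one assembled below. This sketch assumes a hypothetical landing table `raw_products` with a single VARIANT column, so each JSON object becomes one row. It also assumes a stage named `products_stage`. Adapt the names and options to your own setup.

```python
import re

_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")
_PATH = re.compile(r"^[A-Za-z0-9_./-]*$")
_ON_ERROR = {"ABORT_STATEMENT", "CONTINUE", "SKIP_FILE"}


def copy_into_sql(table: str, stage: str, path: str = "",
                  on_error: str = "ABORT_STATEMENT") -> str:
    """COPY INTO for staged JSON files into a single-VARIANT-column table."""
    for name in (table, stage):
        if not _IDENT.match(name):
            raise ValueError(f"unsupported identifier: {name!r}")
    path = path.lstrip("/")
    if not _PATH.match(path):
        raise ValueError(f"unsupported stage path: {path!r}")
    if on_error not in _ON_ERROR:
        raise ValueError(f"unsupported ON_ERROR option: {on_error!r}")
    return (
        f"COPY INTO {table}\n"
        f"  FROM @{stage}/{path}\n"
        "  FILE_FORMAT = (TYPE = JSON)\n"
        f"  ON_ERROR = {on_error}"
    )
```

Snowflake keeps load metadata per table, so rerunning the same COPY skips recently loaded files unless `FORCE = TRUE` is set. This makes retries of the batch job reasonably safe.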

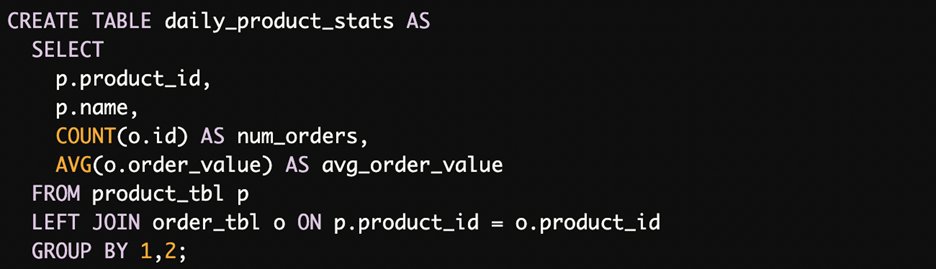

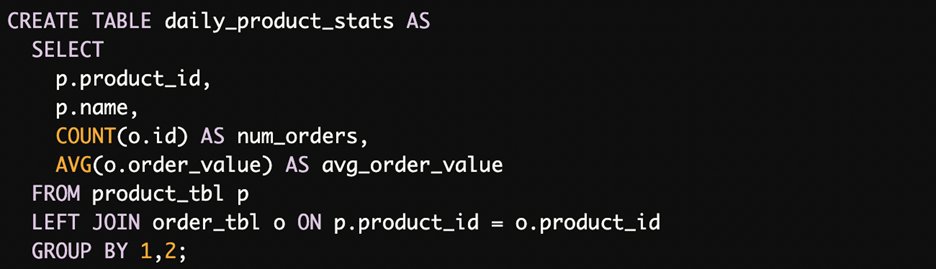

Step 4: If needed, transform the data with SQL queries on the Snowflake table to shape it for reporting.
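As one illustration of Step 4: if the raw JSON landed in a VARIANT column, a CREATE TABLE ... AS SELECT statement can project the JSON keys into typed columns. The Python sketch below builds such a statement. The table names, the `payload` column, and the field map are hypothetical.

```python
import re

_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")
_TYPE = re.compile(r"^[A-Z_]+(\(\d+(,\s*\d+)?\))?$")  # e.g. NUMBER(10,2)


def flatten_variant_sql(source: str, target: str, variant_col: str,
                        fields: dict[str, str]) -> str:
    """CTAS that turns JSON keys in a VARIANT column into typed columns."""
    if not fields:
        raise ValueError("at least one field is required")
    for name in (source, target, variant_col, *fields):
        if not _IDENT.match(name):
            raise ValueError(f"unsupported identifier: {name!r}")
    select_list = []
    for field, sql_type in fields.items():
        if not _TYPE.match(sql_type):
            raise ValueError(f"unsupported type: {sql_type!r}")
        # payload:key reads a JSON key; ::TYPE casts the VARIANT value.
        select_list.append(f"  {variant_col}:{field}::{sql_type} AS {field}")
    return (f"CREATE OR REPLACE TABLE {target} AS\nSELECT\n"
            + ",\n".join(select_list)
            + f"\nFROM {source}")
```

JSON key lookups with `payload:key` are case-sensitive, so the keys in the field map must match the JSON exactly.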

Step 5: Connect Tableau to Snowflake and build interactive dashboards to visualize the data daily.

Step 6: Automate the ETL process by scheduling the load jobs with an orchestration tool such as Apache Airflow.
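The core job of an orchestrator is to run each step only after the steps it depends on have finished. The sketch below is not Airflow. It uses Python's standard `graphlib` module (Python 3.9+) to show that dependency ordering for hypothetical step functions named `extract`, `stage`, `copy`, and `transform`.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def run_pipeline(steps: dict, deps: dict) -> list[str]:
    """Run each step only after the steps it depends on have finished.

    steps: name -> zero-argument callable (hypothetical job functions)
    deps:  name -> set of names that must complete first
    """
    graph = {name: deps.get(name, set()) for name in steps}
    completed = []
    # static_order() yields dependencies first and raises CycleError on cycles.
    for name in TopologicalSorter(graph).static_order():
        steps[name]()  # an exception here stops all downstream steps
        completed.append(name)
    return completed
```

In Airflow, the same dependencies would be declared between tasks in a DAG, for example `extract >> stage >> copy >> transform`. The scheduler then adds timing, retries, and alerting on top.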

This demonstrates a batch ETL pattern where Informatica pulls data from MySQL, loads it into Snowflake via S3 staging, followed by BI integration for analysis. The pipeline can be automated via orchestration tools.

Streaming or near-real-time patterns can be built the same way with Snowpipe, Streams, and Tasks, depending on the requirements of the use case.
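For the streaming variant, Snowpipe can wrap the same kind of COPY INTO statement in a pipe that runs automatically when new files arrive. The following is a minimal Python sketch. The pipe, table, and stage names are hypothetical, and it assumes an external S3 stage.

```python
import re

_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")


def snowpipe_sql(pipe: str, table: str, stage: str, path: str = "") -> list[str]:
    """Auto-ingest pipe that runs COPY INTO whenever new files land in the stage."""
    for name in (pipe, table, stage):
        if not _IDENT.match(name):
            raise ValueError(f"unsupported identifier: {name!r}")
    if not re.fullmatch(r"[A-Za-z0-9_./-]*", path):
        raise ValueError(f"unsupported stage path: {path!r}")
    return [
        # AUTO_INGEST = TRUE makes the pipe react to cloud storage event
        # notifications instead of waiting for explicit REST calls.
        f"CREATE PIPE {pipe}\n"
        "  AUTO_INGEST = TRUE\n"
        "AS\n"
        f"  COPY INTO {table}\n"
        f"  FROM @{stage}/{path.lstrip('/')}\n"
        "  FILE_FORMAT = (TYPE = JSON)",
        # The notification_channel column in this output is the queue ARN
        # that the S3 bucket's event notifications should target.
        f"SHOW PIPES LIKE '{pipe}'",
    ]
```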

Deep Dive into Snowflake API Integration

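In addition to loading and transforming data, Snowflake supports integration with external applications and services through its APIs and client drivers. These include the Snowflake SQL API (a REST API) and connectors for languages such as Python, Java (JDBC), and Go. Because most Snowflake operations can be expressed in SQL, these interfaces let applications manage much of the account and platform programmatically.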

The Snowflake API integrations provide:

- Authentication and access control for securely connecting applications to Snowflake

- APIs for administrative operations such as managing users, warehouses, and databases

- Core data operations such as running queries and loading data

- Metadata management capabilities for programmatic schema access and modifications

- Support for running ETL, orchestration, and data processing jobs

- Asynchronous execution of long-running operations, such as cloning

- Monitoring capabilities to track usage, query history, and more
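Of these interfaces, the Snowflake SQL API is the plain REST option: an application POSTs a JSON body containing a SQL statement to `/api/v2/statements`. The Python sketch below only builds that request with the standard library and does not send it. The account identifier, token, warehouse, database, schema, and role values are placeholders that you would supply.

```python
import json
import re
import urllib.request

_TOKEN_TYPES = {"KEYPAIR_JWT", "OAUTH"}


def build_sql_api_request(account: str, token: str, token_type: str,
                          statement: str, warehouse: str, database: str,
                          schema: str, role: str,
                          timeout: int = 60) -> urllib.request.Request:
    """Build (but do not send) a SQL API request that submits one statement."""
    if not re.fullmatch(r"[A-Za-z0-9_.-]+", account):
        raise ValueError(f"unexpected account identifier: {account!r}")
    if token_type not in _TOKEN_TYPES:
        raise ValueError(f"unsupported token type: {token_type!r}")
    url = f"https://{account}.snowflakecomputing.com/api/v2/statements"
    body = {
        "statement": statement,
        "timeout": timeout,  # seconds before Snowflake cancels the statement
        "warehouse": warehouse,
        "database": database,
        "schema": schema,
        "role": role,
    }
    headers = {
        "Authorization": f"Bearer {token}",
        # Tells Snowflake whether the bearer token is a key-pair JWT or OAuth.
        "X-Snowflake-Authorization-Token-Type": token_type,
        "Content-Type": "application/json",
        "Accept": "application/json",
    }
    return urllib.request.Request(url, data=json.dumps(body).encode("utf-8"),
                                  headers=headers, method="POST")
```

Passing the request to `urllib.request.urlopen()` sends it. A 200 response carries the results. A 202 means the statement is still running, and its `statementHandle` can be polled with `GET /api/v2/statements/<handle>`.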

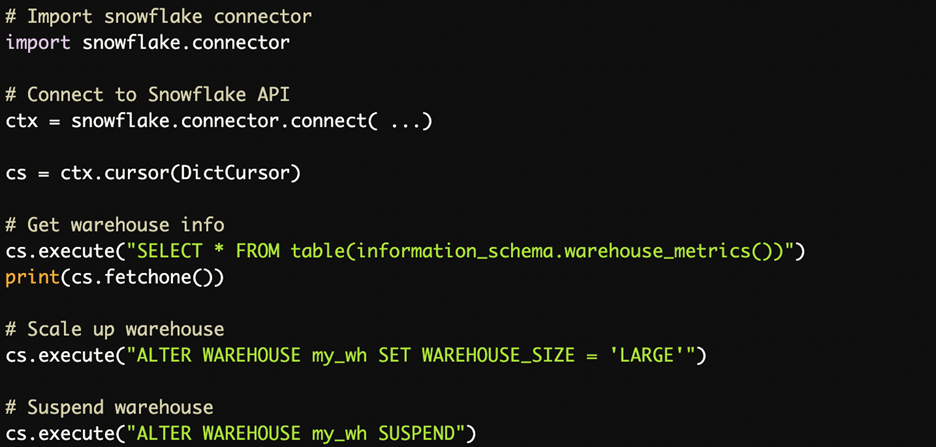

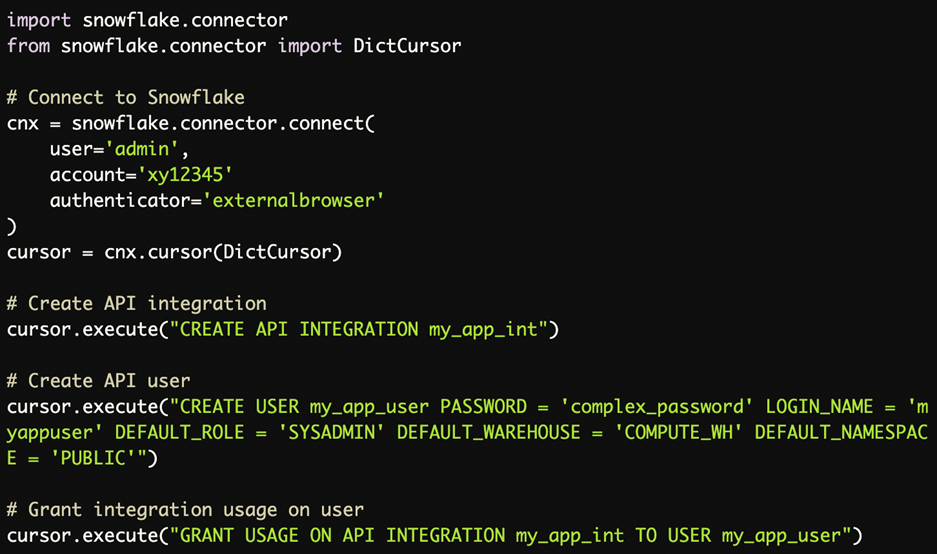

Let us look at some examples of using Snowflake APIs for key integration tasks:

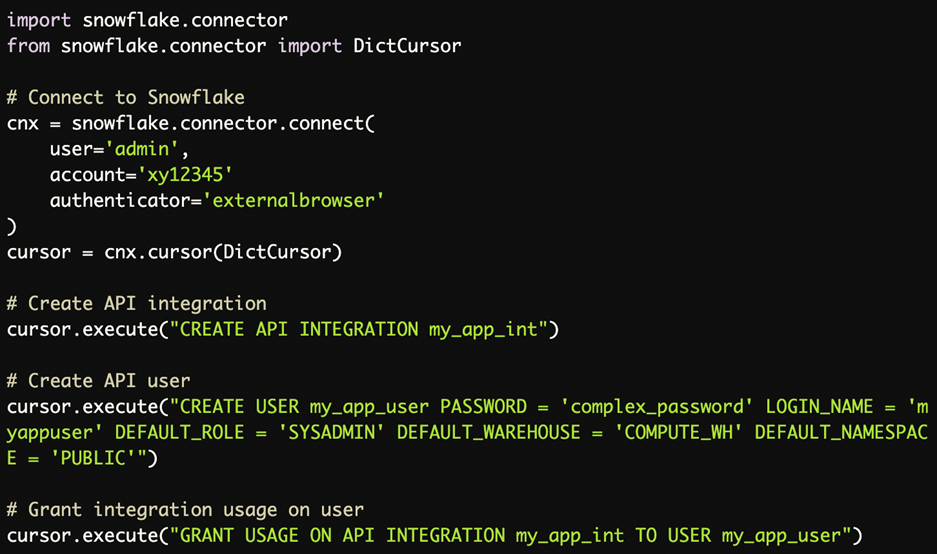

User and Access Management

This example creates an integration and a dedicated API user, then grants that user the access it needs so the application can authenticate.
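The exact objects involved depend on how the application authenticates. As one possibility, assuming key-pair authentication, the Python sketch below generates SQL for a read-only role with least privilege. It also creates a dedicated user that stores only the application's public key. All names are hypothetical, and the grants cover only tables that already exist.

```python
import re

_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")
_B64 = re.compile(r"^[A-Za-z0-9+/]+={0,2}$")


def _ident(name: str) -> str:
    if not _IDENT.match(name):
        raise ValueError(f"unsupported identifier: {name!r}")
    return name


def pem_body(pem: str) -> str:
    """Drop the BEGIN/END lines and join the base64 body of a PEM public key."""
    lines = [ln.strip() for ln in pem.strip().splitlines()]
    return "".join(ln for ln in lines if ln and not ln.startswith("-----"))


def api_user_sql(user: str, role: str, warehouse: str, database: str,
                 public_key_pem: str) -> list[str]:
    """Read-only role plus a key-pair user for one application."""
    user, role, wh, db = map(_ident, (user, role, warehouse, database))
    key = pem_body(public_key_pem)
    if not _B64.match(key):
        raise ValueError("public key does not look like base64 PEM content")
    return [
        f"CREATE ROLE IF NOT EXISTS {role}",
        f"GRANT USAGE ON WAREHOUSE {wh} TO ROLE {role}",
        f"GRANT USAGE ON DATABASE {db} TO ROLE {role}",
        f"GRANT USAGE ON ALL SCHEMAS IN DATABASE {db} TO ROLE {role}",
        f"GRANT SELECT ON ALL TABLES IN DATABASE {db} TO ROLE {role}",
        # Only the public key is stored in Snowflake; the application keeps
        # the private key and uses it to sign the JWT it authenticates with.
        f"CREATE USER IF NOT EXISTS {user}\n"
        f"  DEFAULT_ROLE = {role}\n"
        f"  DEFAULT_WAREHOUSE = {wh}\n"
        f"  RSA_PUBLIC_KEY = '{key}'",
        f"GRANT ROLE {role} TO USER {user}",
    ]
```

To cover tables created later as well, you could add `GRANT SELECT ON FUTURE TABLES IN DATABASE ...`.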

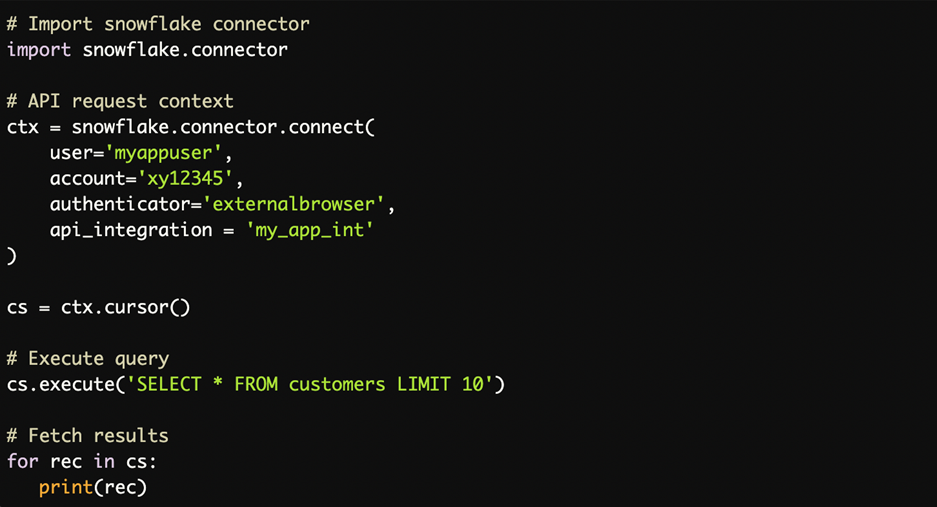

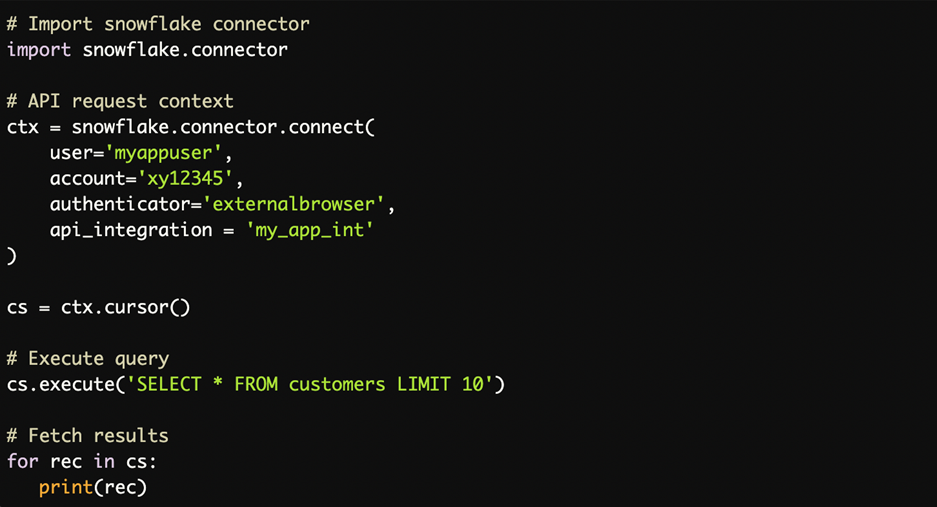

Query Execution

This connects an application to Snowflake through the API as the API user and runs a sample query.
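When the application uses the SQL API, query results come back as JSON, with column metadata kept separate from the row values. The parser below is a minimal sketch. It reads column names from `resultSetMetaData.rowType` and row values from `data`, and it reports whether the statement is still running (HTTP 202) or finished (HTTP 200).

```python
def parse_sql_api_response(status: int, payload: dict):
    """Interpret one SQL API response.

    Returns ("running", statement_handle) while the statement is still
    executing (HTTP 202), or ("done", rows) with rows as column->value dicts.
    """
    if status == 202:
        # Poll GET /api/v2/statements/<handle> until it returns 200.
        return "running", payload["statementHandle"]
    if status != 200:
        raise RuntimeError(f"SQL API error {status}: {payload.get('message')}")
    names = [col["name"] for col in payload["resultSetMetaData"]["rowType"]]
    # Values arrive as strings (or None for NULL); cast them as needed.
    # Large results are split into partitions: only the first one is in
    # this response, and the rest must be fetched separately.
    rows = [dict(zip(names, values)) for values in payload.get("data", [])]
    return "done", rows
```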

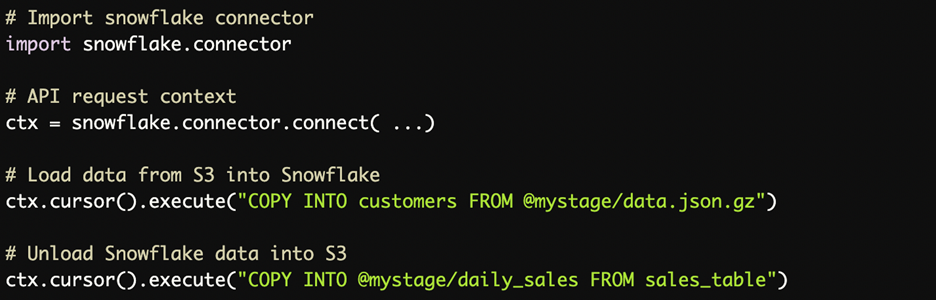

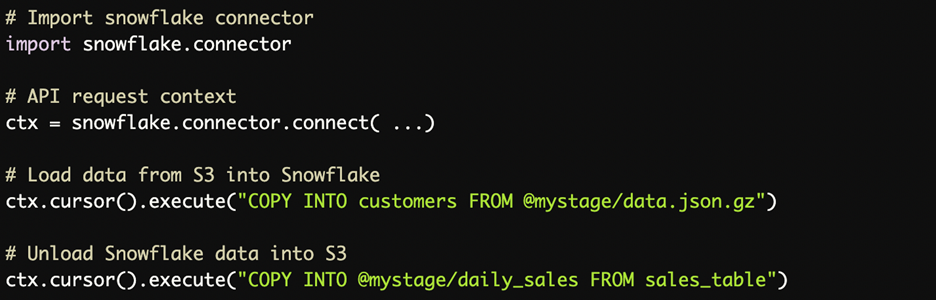

Loading and Unloading Data

This uses Snowflake stages and COPY INTO commands, issued through the API, to load and unload data.
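Loading uses COPY INTO a table. Unloading reverses the direction with COPY INTO a stage location. The Python sketch below builds an unload statement. The table, stage, and prefix are hypothetical, and the sketch handles only Parquet and CSV output.

```python
import re

_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")


def unload_sql(table: str, stage: str, prefix: str, file_type: str = "PARQUET") -> str:
    """COPY INTO <stage> writes a table's rows to files under a stage prefix."""
    for name in (table, stage):
        if not _IDENT.match(name):
            raise ValueError(f"unsupported identifier: {name!r}")
    if not re.fullmatch(r"[A-Za-z0-9_./-]+", prefix):
        raise ValueError(f"unsupported prefix: {prefix!r}")
    if file_type not in {"PARQUET", "CSV"}:
        raise ValueError("this sketch only handles PARQUET or CSV")
    return (
        f"COPY INTO @{stage}/{prefix.lstrip('/')}\n"
        f"  FROM {table}\n"
        f"  FILE_FORMAT = (TYPE = {file_type})\n"
        "  HEADER = TRUE\n"    # keep column names in the output files
        "  OVERWRITE = TRUE"   # replace same-named files from a previous run
    )
```

If the stage is internal, the resulting files can then be downloaded with the GET command, for example through SnowSQL or the Python connector.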

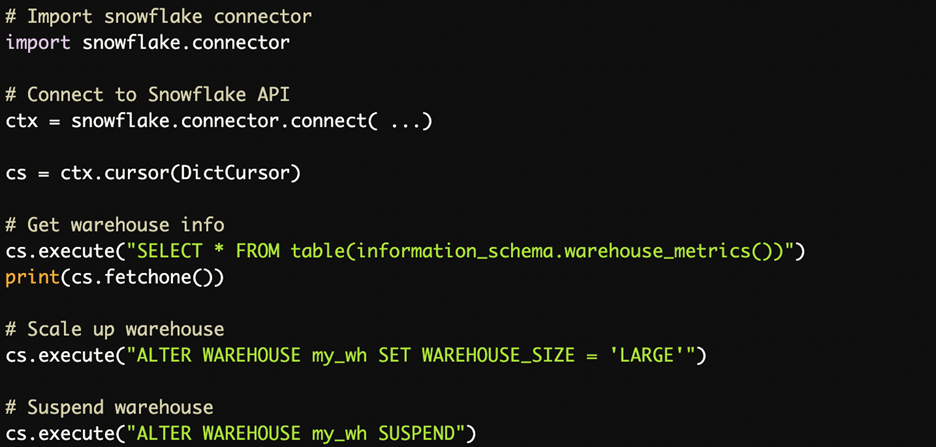

Monitoring and Administration

This illustrates how Snowflake APIs can be used for monitoring and administrative operations like scaling and suspending warehouses.
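Scaling, suspending, and monitoring are all plain SQL, so any of the API paths above can run them. The helpers below are a minimal sketch that builds those statements for a hypothetical warehouse. The size list is deliberately a subset of what Snowflake accepts. The query-history call assumes a current database is set, because it reads from that database's INFORMATION_SCHEMA.

```python
import re

_IDENT = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")
# A subset of the values Snowflake accepts for WAREHOUSE_SIZE.
_SIZES = ("XSMALL", "SMALL", "MEDIUM", "LARGE", "XLARGE", "XXLARGE")


def _ident(name: str) -> str:
    if not _IDENT.match(name):
        raise ValueError(f"unsupported identifier: {name!r}")
    return name


def resize_warehouse_sql(warehouse: str, size: str) -> str:
    size = size.upper()
    if size not in _SIZES:
        raise ValueError(f"unsupported size: {size!r}")
    return f"ALTER WAREHOUSE {_ident(warehouse)} SET WAREHOUSE_SIZE = '{size}'"


def suspend_warehouse_sql(warehouse: str) -> str:
    # A suspended warehouse consumes no compute credits until it resumes.
    return f"ALTER WAREHOUSE {_ident(warehouse)} SUSPEND"


def recent_queries_sql(limit: int = 100) -> str:
    limit = int(limit)
    if not 1 <= limit <= 10000:
        raise ValueError("RESULT_LIMIT must be between 1 and 10000")
    return (
        "SELECT query_id, warehouse_name, execution_status, total_elapsed_time\n"
        f"FROM TABLE(INFORMATION_SCHEMA.QUERY_HISTORY(RESULT_LIMIT => {limit}))\n"
        "ORDER BY start_time DESC"
    )
```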

There are endless possibilities once you start harnessing the power of Snowflake APIs for programmatic automation and integration!

Strategies for Effective Snowflake API Integration

While Snowflake APIs open up many integration possibilities, here are some key strategies for maximizing effectiveness:

- Authenticate via OAuth or integrated identity providers for single sign-on. Never hardcode Snowflake credentials.

- Follow security best practices around key rotation, IP allowlisting, and access control. Protect API keys and tokens.

- Implement request validation, input sanitization and rate limiting on API requests to prevent abuse.

- Handle transient errors and throttling with retry logic (for example, exponential backoff) for resilience against failures.

- Set up API monitoring, logging, and alerts to track usage patterns and promptly detect issues.

- Provide SDKs and well-documented APIs for easy integration by developers.

- Support versioning to maintain backwards compatibility as APIs evolve.

- Containerize API workloads for portability across environments.

- Follow API-first design principles for consistent interfaces.
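The retry advice above is often implemented as capped exponential backoff with jitter. The Python sketch below retries a call on throttling (429) and temporary server errors (5xx), and it gives up immediately on any other error. `ApiError` is a hypothetical exception type standing in for whatever your HTTP client raises. The `sleep` parameter is injectable so the behavior can be tested without real waiting.

```python
import random
import time

# Status codes usually worth retrying: throttling and temporary server errors.
RETRYABLE_STATUS = {429, 500, 502, 503, 504}


class ApiError(Exception):
    """Hypothetical error type carrying the HTTP status of a failed call."""

    def __init__(self, status: int):
        super().__init__(f"HTTP {status}")
        self.status = status


def call_with_retries(fn, max_attempts: int = 5, base_delay: float = 0.5,
                      max_delay: float = 30.0, sleep=time.sleep):
    """Call fn(); after a retryable error, wait a random time up to a capped,
    doubling limit, then retry, making at most max_attempts calls in total."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except ApiError as err:
            if err.status not in RETRYABLE_STATUS or attempt == max_attempts:
                raise
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, cap))  # "full jitter"
```

Randomizing the whole wait spreads out retries from many clients, so they do not all hit the service again at the same moment.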

By following these strategies, you can build scalable and robust API-based integration with Snowflake’s data cloud.

Ready to Transform Your Data?

Snowflake integration isn’t just about connecting systems—it’s about unlocking the full potential of your data. And at Beyond Key, we specialize in making that happen seamlessly.

Whether you’re migrating data, setting up ETL workflows, or building real-time dashboards, our team brings the expertise to ensure your Snowflake journey is smooth and impactful.

Get in touch with us today for a free consultation. Your data deserves better—and we’re here to make sure it gets exactly that.

Data chaos is optional. Snowflake integration is the solution. And Beyond Key? We’re your partner in making it happen.

To learn more and get expert guidance tailored to your use case, check out Beyond Key’s Snowflake consulting services. Our team brings extensive real-world expertise to help you through any stage of your Snowflake journey – from planning and architecture to seamless deployment and ongoing enhancements. Get in touch for a free consultation.